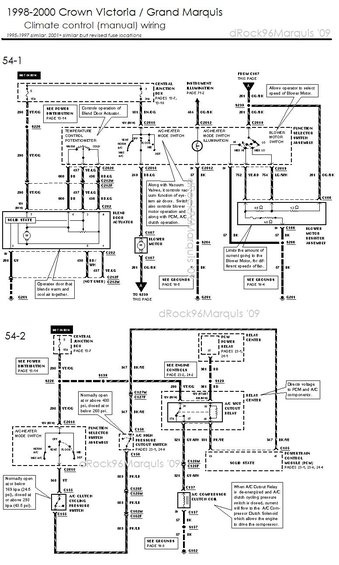

2002 Mercury Marquis Wiring Diagrams

- Category : Wiring Diagrams

- Post Date : January 27, 2026

Building on @camo's answer, since you're looking to use the secret value outside Databricks, you can use the Databricks Python SDK to fetch the bytes representation of the secret value, then decode and print it locally (or on any compute resource outside Databricks).
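A minimal sketch of that approach, assuming the `databricks-sdk` package is installed and authentication is already configured through environment variables or a config profile. The function names `decode_secret_value` and `fetch_secret` are hypothetical helpers, not part of the SDK; the secrets API returns the value base64-encoded, so it is decoded before use.

```python
import base64


def decode_secret_value(encoded_value: str) -> str:
    """Decode the base64 string returned by the secrets API into text."""
    return base64.b64decode(encoded_value).decode("utf-8")


def fetch_secret(scope: str, key: str) -> str:
    """Fetch a secret with the Databricks Python SDK and decode it locally."""
    # Imported lazily so the decoding helper works without the SDK installed.
    from databricks.sdk import WorkspaceClient

    # Assumption: credentials come from the environment or ~/.databrickscfg.
    client = WorkspaceClient()
    response = client.secrets.get_secret(scope=scope, key=key)
    return decode_secret_value(response.value)
```

Calling `fetch_secret("my-scope", "my-key")` (both names are placeholders) from a local machine would return the plain-text value, provided the caller has read permission on the scope.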

EDIT: I got a message from a Databricks employee that currently (DBR 15.4 LTS) the parameter marker syntax is not supported in this scenario. It might work in future versions. Original question:...

It's not possible. Databricks just scans the entire output for occurrences of secret values and replaces them with "[REDACTED]". The redaction cannot catch the value once you transform it. For example, as you already tried, you could insert spaces between characters, and that would reveal the value. You can also use a trick with an invisible character, for example the Unicode invisible separator, which is encoded as ...
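The behaviour described above can be illustrated with a toy model. This is not Databricks' actual implementation, only a sketch that assumes redaction is a literal substring replacement; the `redact` function and the secret value are hypothetical.

```python
def redact(output: str, secret: str) -> str:
    """Toy redaction: replace literal occurrences of the secret in the output."""
    return output.replace(secret, "[REDACTED]")


secret = "hunter2"  # hypothetical secret value, for illustration only

# The untransformed value is matched and hidden.
print(redact(f"value={secret}", secret))

# Inserting spaces between characters changes the string, so a literal
# match no longer finds it and the characters pass through.
spaced = " ".join(secret)
print(redact(spaced, secret))
```

Because any reversible transformation defeats a literal match, output redaction of this kind guards against accidental display, not against a user who is allowed to read the secret.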

Method 3: Using a third-party tool named DBFS Explorer. DBFS Explorer was created as a quick way to upload and download files to the Databricks filesystem (DBFS). It works with both AWS and Azure instances of Databricks. You will need to create a bearer token in the web interface in order to connect.

Installing multiple libraries 'permanently' on Databricks' cluster

Is Databricks designed for such use cases, or is it a better approach to copy this table (gold layer) into an operational database such as Azure SQL DB after the transformations are done in PySpark via Databricks? What are the cons of this approach? One would be that the Databricks cluster has to be up and running all the time, i.e. you would need an interactive cluster.

Databricks is smart and all, but how do you identify the path of your current notebook? The guide on the website does not help. It suggests: %scala dbutils.notebook.getContext.notebookPath res1: ...

Can someone let me know what the equivalent of the following CREATE VIEW in Databricks SQL is in PySpark? CREATE OR REPLACE VIEW myview as select last_day(add_months(current_date(), 1)) Can someo...
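One possible PySpark counterpart, as a sketch: run the same query through `spark.sql` and register the result with `createOrReplaceTempView`. Note the assumption that a session-scoped temporary view is acceptable; unlike `CREATE OR REPLACE VIEW`, it is not persisted in the catalog. The function name `create_myview` and the column alias `month_end` are hypothetical.

```python
# Same expression as in the SQL statement; the alias is an added assumption.
VIEW_QUERY = "SELECT last_day(add_months(current_date(), 1)) AS month_end"


def create_myview(spark) -> None:
    """Register the query result as a temporary view named 'myview'.

    `spark` is expected to be an active SparkSession, such as the one
    predefined in a Databricks notebook.
    """
    spark.sql(VIEW_QUERY).createOrReplaceTempView("myview")
```

After `create_myview(spark)`, the view can be queried with `spark.table("myview")` for the lifetime of the session.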

Use Databricks Datetime Patterns. According to SparkSQL documentation on the Databricks website, you can use datetime patterns specific to Databricks to convert to and from date columns.

By default, Azure Databricks does not have an ODBC driver installed. Run the following commands in a single cell to install the MS SQL ODBC driver on an Azure Databricks cluster.
